App Store A/B Testing: How to Get Real Results from Your Experiments

Your app store listing is your app’s digital storefront. As with landing pages, even small tweaks can produce significant lifts or major setbacks. That's why structured A/B testing of screenshots, icons, metadata, and videos is one of the highest-impact activities for app growth teams.

But effective testing isn’t just about running experiments; it’s about structuring, tracking, and analyzing them properly.

Avoid These Common Pitfalls

Before diving into best practices, let's highlight what typically ruins a good test:

- Lack of a Clear Hypothesis: "Trying something new" isn’t enough.

- Multiple Changes at Once: Changing visuals, messaging, and layout simultaneously makes insights unclear.

- Ending Tests Prematurely: Statistical significance requires patience; tests should run for at least 2 weeks.

Always calculate:

- Minimum Detectable Effect (MDE): The smallest meaningful uplift you aim for.

- Sample Size: The number of installs or impressions needed to reach a valid conclusion.

Rule of thumb: Calculate the required sample size from your baseline conversion rate and estimated MDE using an online sample size calculator.
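For readers who prefer to run the numbers themselves, here is a minimal sketch of the standard sample size approximation for comparing two conversion rates. The function name, the default 5% significance level, and the 80% power are assumptions chosen for illustration, not values prescribed by either store.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_cvr, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant (two-sided two-proportion z-test).

    baseline_cvr: current conversion rate, e.g. 0.30 for 30%
    relative_mde: smallest relative uplift worth detecting, e.g. 0.05 for +5%
    alpha, power: assumed defaults, adjust to your own risk tolerance
    """
    if not 0 < baseline_cvr < 1:
        raise ValueError("baseline_cvr must be between 0 and 1")
    if relative_mde <= 0:
        raise ValueError("relative_mde must be positive")

    p1 = baseline_cvr
    p2 = baseline_cvr * (1 + relative_mde)  # conversion rate if the uplift is real
    if p2 >= 1:
        raise ValueError("baseline_cvr * (1 + relative_mde) must stay below 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    needed = sample_size_per_variant(baseline_cvr=0.30, relative_mde=0.05)
    print(f"Visitors needed per variant: {needed}")
```

Note how quickly the requirement grows as the MDE shrinks: halving the uplift you want to detect roughly quadruples the traffic each variant needs.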

How to Structure an Effective A/B Test

Start with a precise hypothesis:

If we [change X], we expect [result Y] from [audience Z] because [reason].

Examples:

- "If screenshot #2 leads with social proof, we expect a higher conversion rate in Tier 1 markets due to increased trust."

- "If we highlight dark mode UI first, we anticipate a better tap-through rate among Gen Z users because of alignment with design preferences."

Next, create your test variants:

- V1 (Control): Current live version

- V2 (Variant): Introduce one significant change

Maintain consistency in other elements (color, layout, copy tone).

Key Metrics to Track:

- Sample size

- Conversion rate (CVR)

- Statistical significance

- Potential seasonal or campaign traffic overlaps
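To check conversion rate and statistical significance on exported numbers, a pooled two-proportion z-test is one common option. The sketch below assumes you have page views and installs per variant; the function and parameter names are hypothetical, and the store consoles may compute their own confidence figures differently.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(control_installs, control_views,
                          variant_installs, variant_views):
    """Compare the CVR of control and variant with a pooled two-sided z-test."""
    cvr_control = control_installs / control_views
    cvr_variant = variant_installs / variant_views

    # Pooled conversion rate under the assumption that both versions perform the same
    pooled = (control_installs + variant_installs) / (control_views + variant_views)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / control_views + 1 / variant_views))
    if std_error == 0:
        raise ValueError("cannot test when the pooled conversion rate is 0 or 1")

    z = (cvr_variant - cvr_control) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value

    return {
        "cvr_control": cvr_control,
        "cvr_variant": cvr_variant,
        "relative_uplift": (cvr_variant - cvr_control) / cvr_control,
        "z": z,
        "p_value": p_value,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not real test data
    result = two_proportion_z_test(3000, 10000, 3300, 10000)
    print(f"Relative uplift: {result['relative_uplift']:.1%}, p-value: {result['p_value']:.4f}")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be noise, but it says nothing about seasonal or campaign overlaps, which still need a manual check.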

Platform-Specific Testing Guidelines

Apple (Product Page Optimization - PPO)

- Managed through App Store Connect

- Allows up to 3 variants (treatments) tested against the original product page

- Tests typically run for 14–21 days

- Minimum 100,000 impressions per variant recommended

- Only visual elements tested (screenshots, icons, app preview videos), no metadata changes

Google Play (Store Listing Experiments)

- Reports results in downloads (installs) rather than impressions

- Supports testing of short & long descriptions, screenshots, and icon

- Watch out for traffic contamination (Google Ads or external sources)

Post-Test Analysis: Extracting Actionable Insights

When a test variant wins:

- Determine if success was due to improved clarity, emotional appeal, or better alignment with user intent.

- Document findings clearly for reference in future tests.

If the test is neutral:

- A neutral result is still valuable: understanding what doesn’t move the needle is just as important.

Log every result systematically in your Creative Backlog or ASO Knowledge Base. This ensures continuous learning and actionable insights across your team.

Every A/B test should fuel a meaningful learning loop, moving beyond vanity metrics to drive genuine growth.

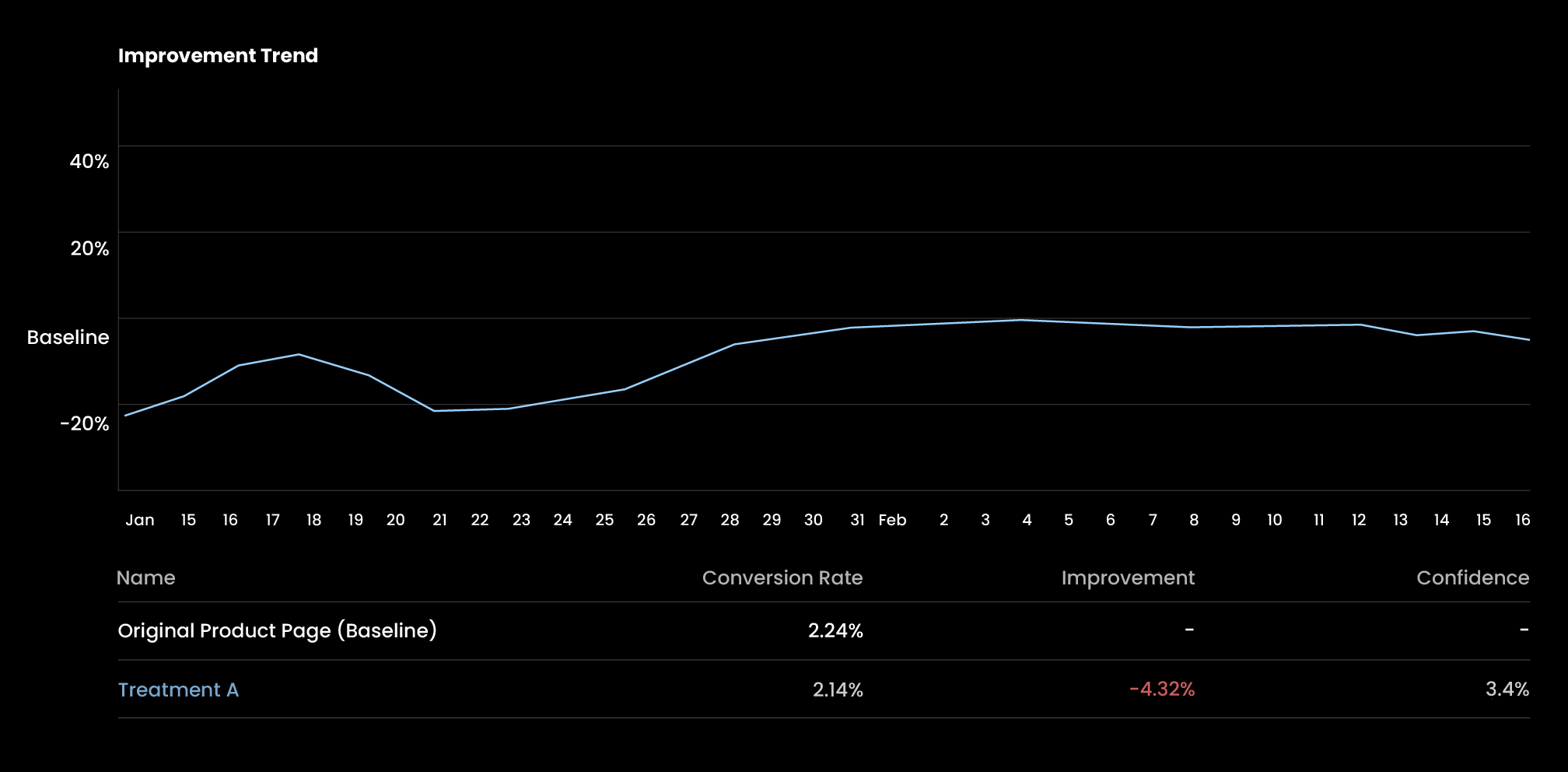

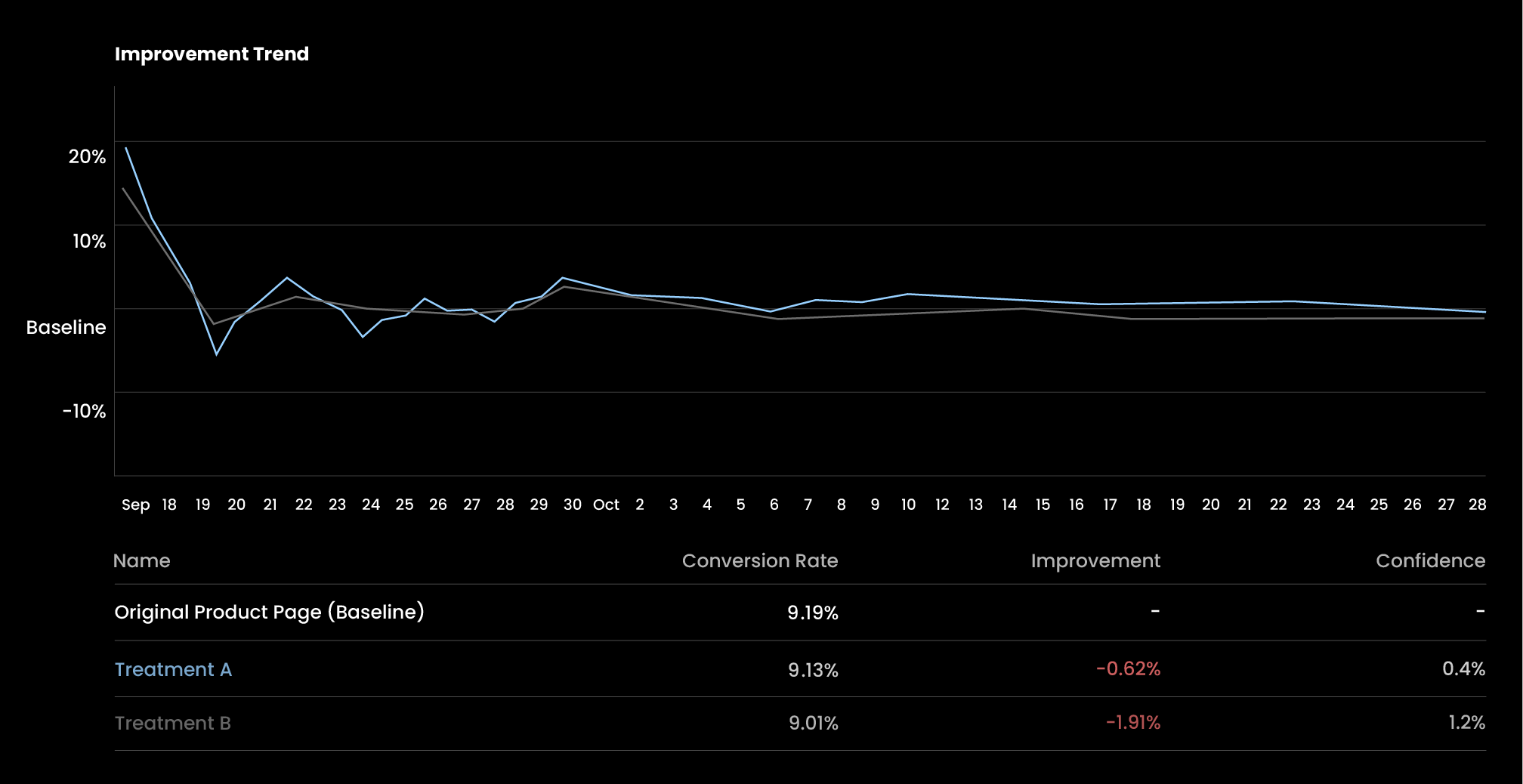

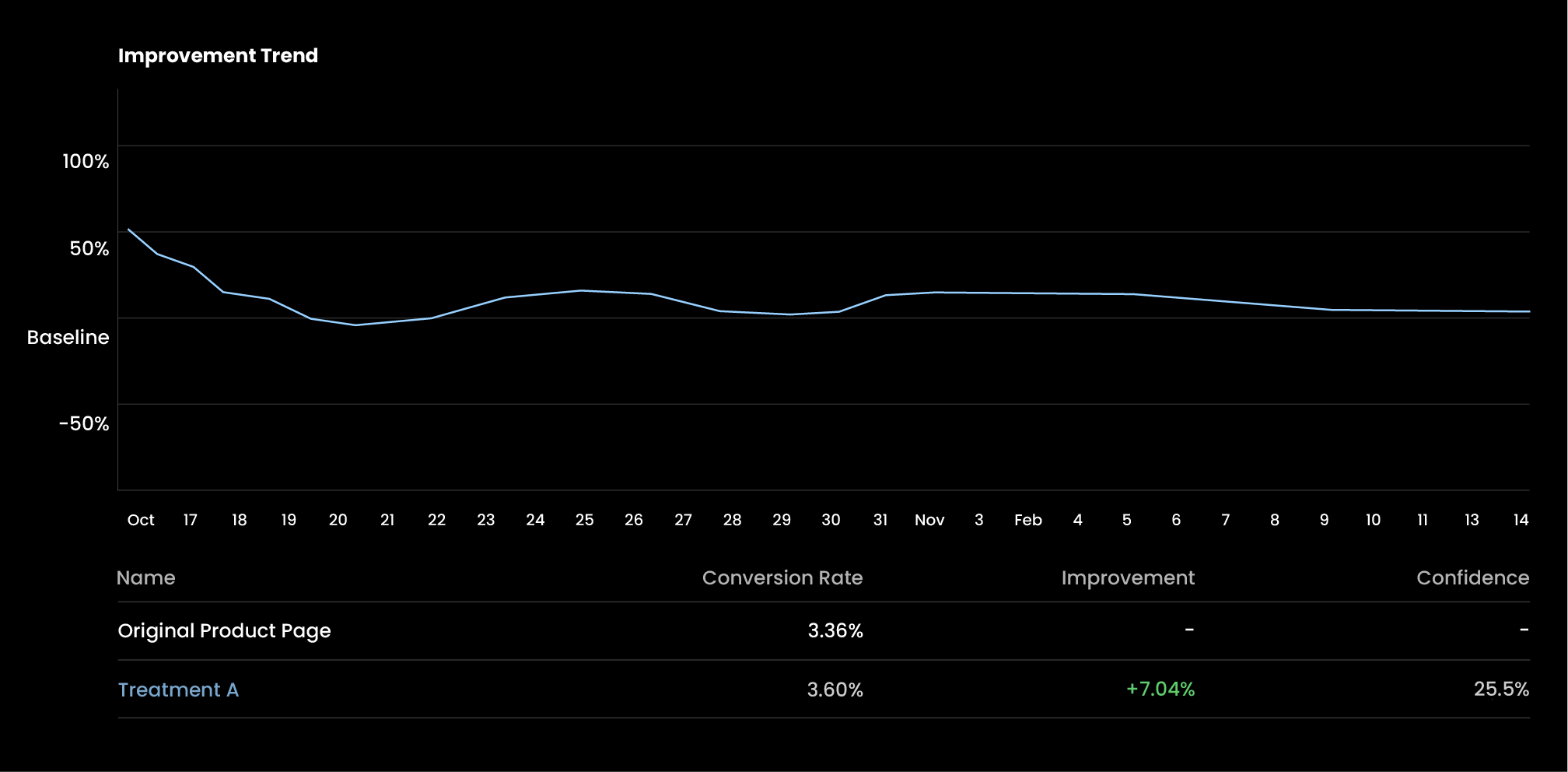

For small to mid-sized apps, the native A/B testing tools on the App Store and Google Play come with limitations, particularly in customization and depth of result analysis. These tools offer basic testing capabilities, but they may not always yield large or statistically significant outcomes for smaller apps.

However, it’s still entirely feasible to run tests on these platforms. With a systematic approach and proper post-test evaluation (e.g., sequential analysis), even smaller-scale experiments can contribute to meaningful conversion rate (CVR) optimization when handled with a professional mindset.

So... Should You Keep That Variant or Not?

Sometimes tools say “winner”, but your gut says “meh.”

Here’s a practical framework we use to decide:

P.S. Don’t forget to track your conversion rates after applying a variant and, if possible, run a before-and-after analysis to better understand the impact of the change.

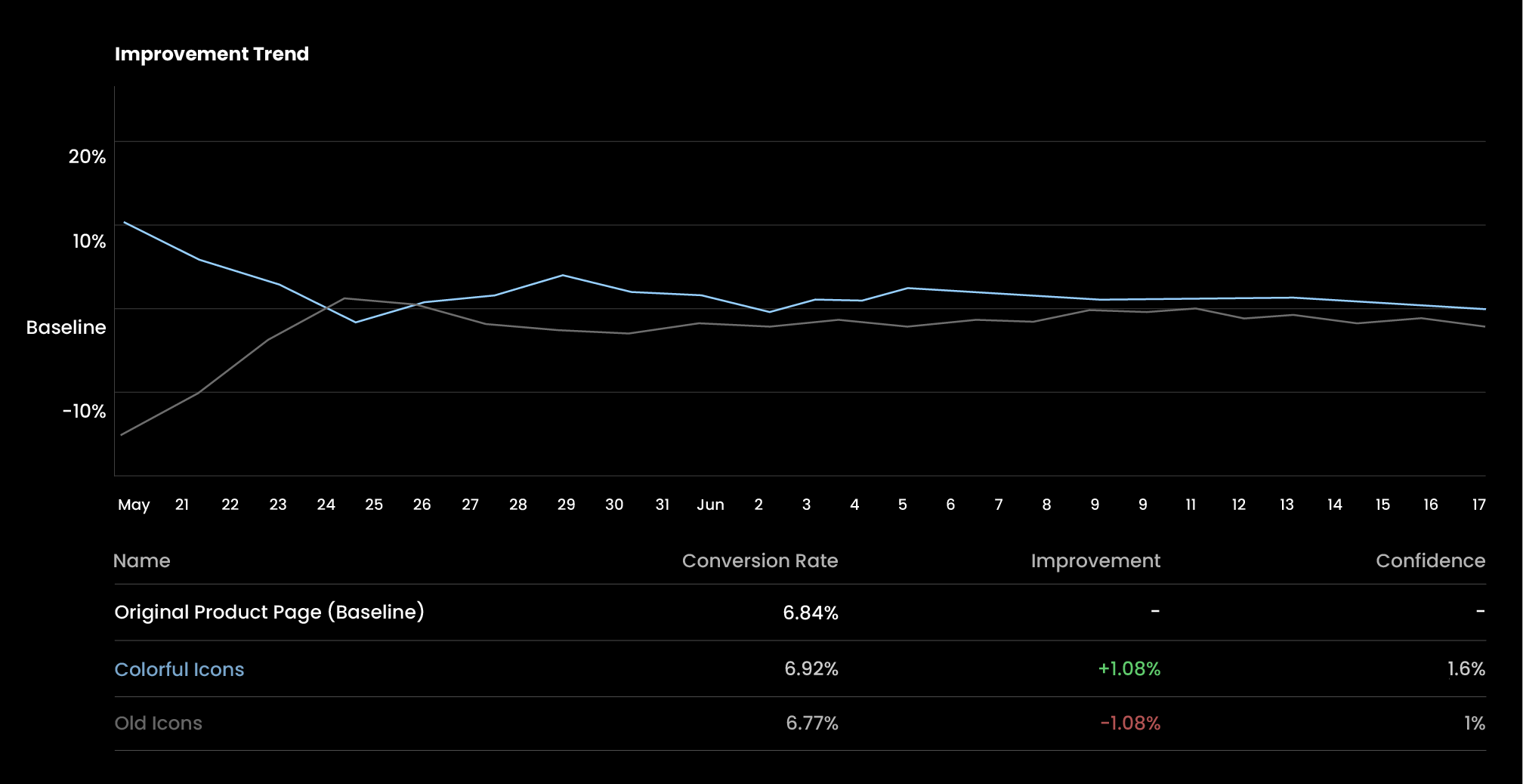

Don't apply the variant if...

- It consistently underperforms the control.

- It fluctuates with no clear trend or significance.

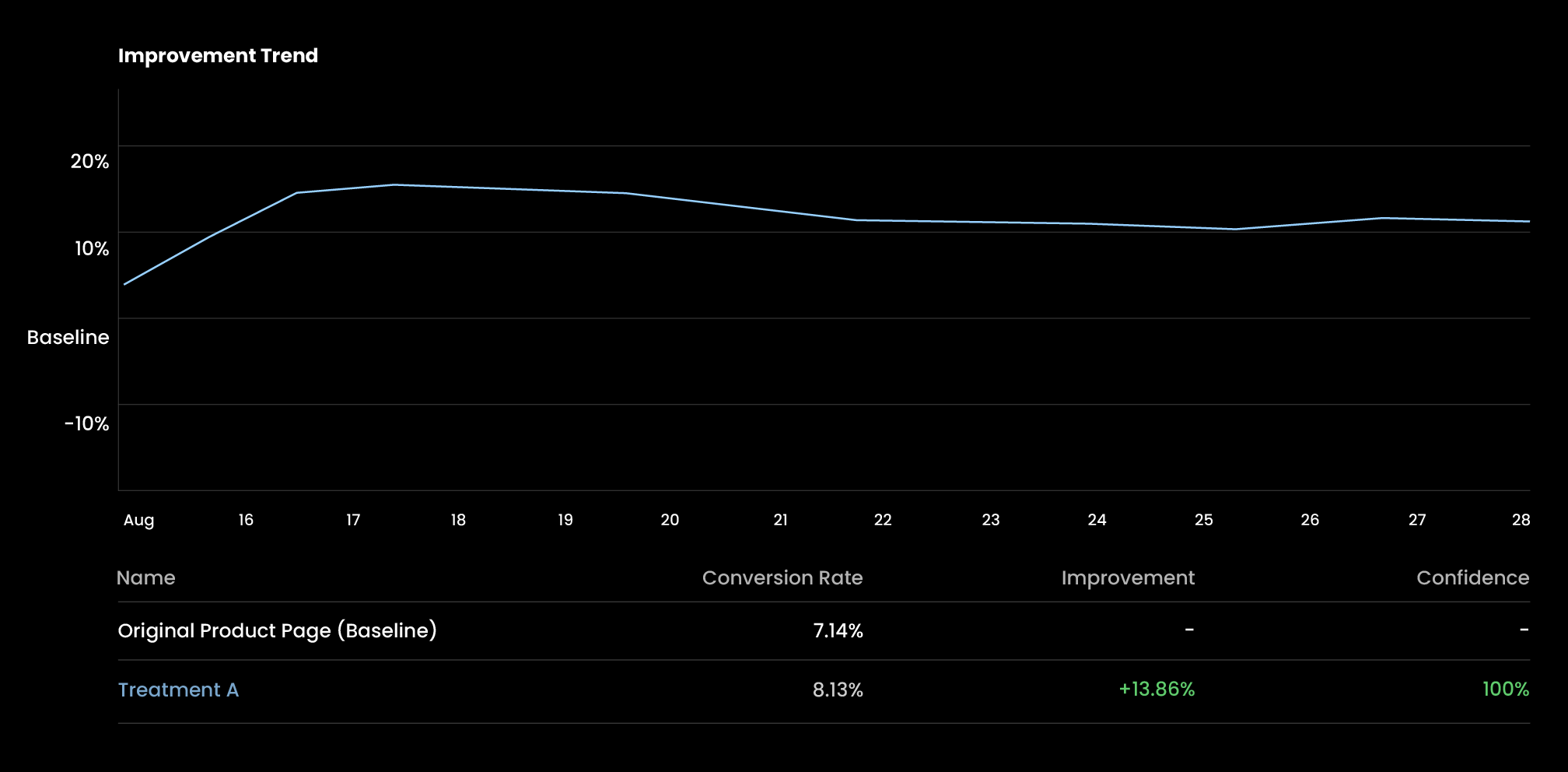

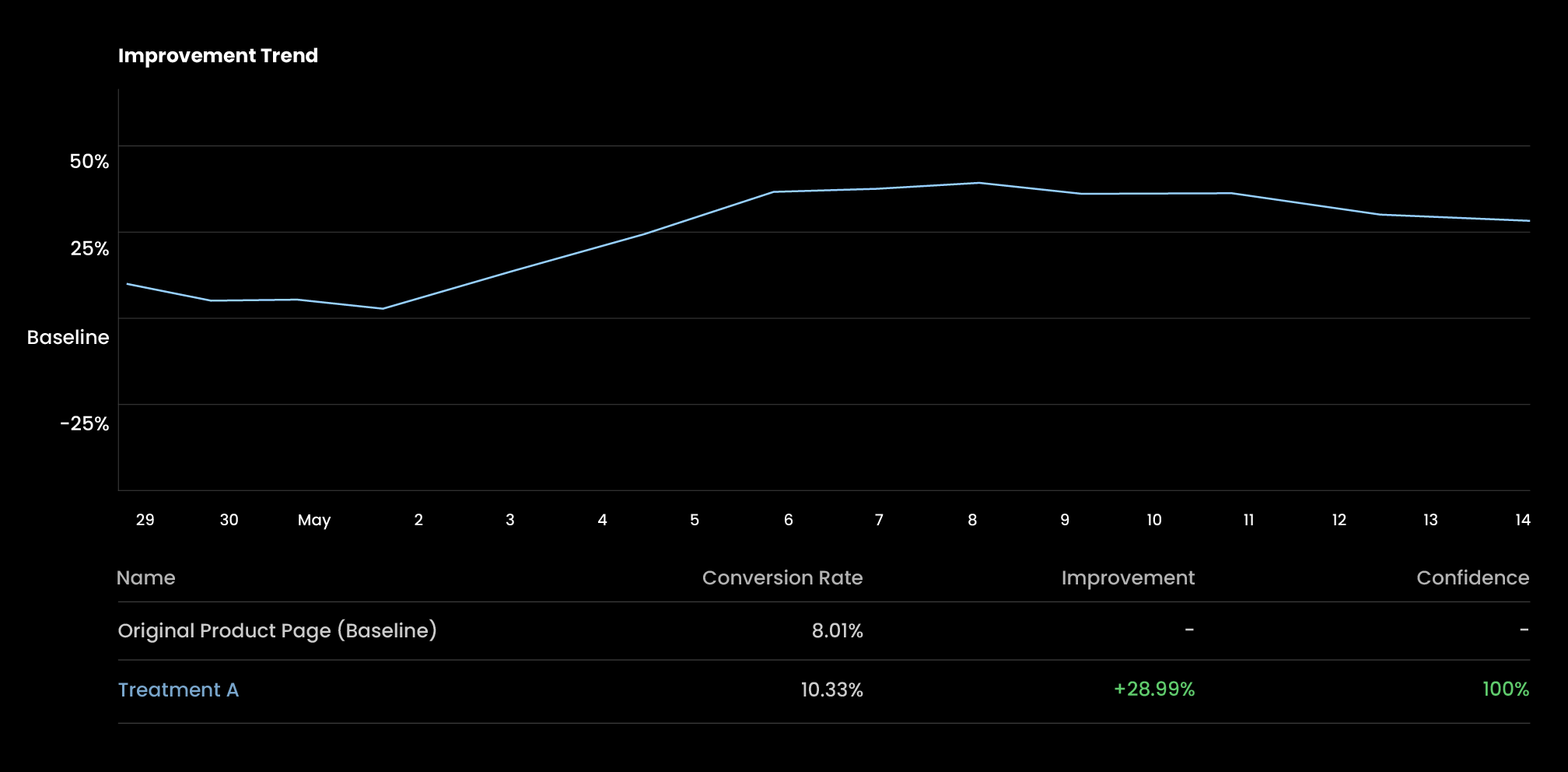

Apply the variant if...

- It shows consistent positive uplift, even if it’s not fully significant.

- It hits 100% confidence as reported by the testing tool and shows a clear CVR gain.
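The apply / don't apply rules above can be roughly sketched in code. This is an illustration only: the function name, the 95% confidence threshold, and the "80% of days" definition of "consistent" are all assumptions, and you should tune them to your own risk tolerance and traffic.

```python
def decide_variant(daily_uplifts, confidence,
                   confidence_threshold=0.95, consistency_threshold=0.8):
    """Suggest whether to apply a variant.

    daily_uplifts: relative CVR uplift of the variant vs. control per day
                   (e.g. 0.03 means +3% on that day)
    confidence:    confidence reported by the testing tool, between 0 and 1
    Both thresholds are hypothetical defaults, not store recommendations.
    """
    if not daily_uplifts:
        raise ValueError("daily_uplifts must contain at least one day")

    days = len(daily_uplifts)
    positive_share = sum(1 for u in daily_uplifts if u > 0) / days
    negative_share = sum(1 for u in daily_uplifts if u < 0) / days
    mean_uplift = sum(daily_uplifts) / days

    # Rule 1: the variant loses on most days
    if negative_share >= consistency_threshold:
        return "keep control: variant consistently underperforms"
    # Rule 2: high confidence and a positive average gain
    if mean_uplift > 0 and confidence >= confidence_threshold:
        return "apply variant: high confidence and clear CVR gain"
    # Rule 3: not fully significant, but positive on most days
    if mean_uplift > 0 and positive_share >= consistency_threshold:
        return "apply variant: consistent positive uplift"
    # Rule 4: everything else is treated as noise
    return "keep control: no clear trend or significance"


if __name__ == "__main__":
    # Illustrative values only
    print(decide_variant([0.02, 0.01, 0.03, 0.02, 0.01], confidence=0.70))
    print(decide_variant([0.05, -0.04, 0.03, -0.05], confidence=0.50))
```

Treat the output as a prompt for discussion rather than a verdict; a before-and-after check on live conversion rates is still worth doing once a variant is applied.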

Every A/B Test Should Fuel a Learning Loop

Structured A/B testing isn’t just about finding a winning screenshot; it’s about building a system of continuous learning and conversion lift.

Run smarter tests. Learn faster. Scale what works.

Want a faster way to decide?

Book your App Store A/B Testing Setup Session and turn guesswork into data-driven growth.